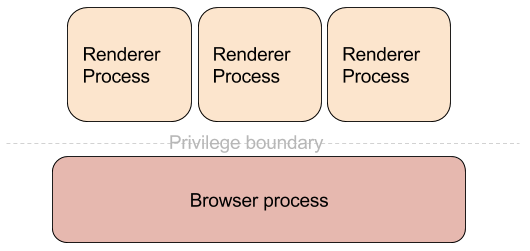

One of the main pieces of functionality in a browser is navigation: the process through which the user loads documents. Let us trace the life of a navigation from the time a URL is typed in the URL bar until the web page is completely loaded. In this post I will use the word “browser” to describe the program the user sees, not just the browser process, which is the privileged one in Chromium’s security model.

If a document is already loaded, the first step is to execute its beforeunload event handler. This handler allows the page to ask the user whether they really want to leave it. It is useful for pages such as forms whose data has not been submitted yet, so that the data is not lost when moving to a new document. If the user cancels the navigation, no further work is performed.
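The beforeunload decision can be modeled as a small function. This is a hypothetical Python sketch, not Chromium code: `should_proceed`, `beforeunload_handler` and `ask_user` are illustrative names, and the handler is simplified to a callable that returns whether the page wants the user to be prompted.

```python
from typing import Callable, Optional


def should_proceed(
    beforeunload_handler: Optional[Callable[[], bool]],
    ask_user: Callable[[], bool],
) -> bool:
    """Decide whether a navigation may continue past the beforeunload step.

    beforeunload_handler: None if the page registered no handler; otherwise a
        callable returning True when the page asks for a confirmation prompt
        (for example, because a form has unsaved data).
    ask_user: shows the prompt and returns True if the user chooses to leave.
    """
    if beforeunload_handler is None:
        # No handler registered: nothing can hold the navigation back.
        return True
    if not beforeunload_handler():
        # The handler ran but did not ask for a prompt.
        return True
    # The page asked for confirmation, so the user's answer decides.
    return ask_user()


# A page with an unsaved form, and a user who chooses to stay on the page.
print(should_proceed(lambda: True, lambda: False))  # False: navigation cancelled
```

Only the last branch involves the user at all; in the other two cases the navigation moves straight on to the network request.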

If there is no beforeunload handler registered or the user agreed to proceed, the next step is for the browser to make a network request to the specified URL to retrieve the contents of the document to be rendered. Chromium’s implementation uses the term “provisional load” to describe the state it is in at the start of the network request. Assuming no network-level error is encountered (e.g. a DNS resolution error or a socket connection timeout), the server responds, and the response headers arrive first. Once the headers are parsed, they give enough information to determine what needs to be done next.

The HTTP response code tells the browser which of these conditions has occurred:

- The request succeeded (2xx)

- A redirect has been encountered (3xx)

- An HTTP-level error has occurred (4xx, 5xx)
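The three outcomes above map directly onto status code ranges. The following is a hypothetical sketch (`ResponseKind` and `classify_status` are illustrative names); for simplicity it treats every 3xx code as a redirect, even though a code such as 304 Not Modified does not redirect anywhere.

```python
from enum import Enum


class ResponseKind(Enum):
    SUCCESS = "success"        # 2xx
    REDIRECT = "redirect"      # 3xx
    HTTP_ERROR = "http_error"  # 4xx, 5xx


def classify_status(status: int) -> ResponseKind:
    """Map a final HTTP status code to one of the three outcomes above."""
    if 200 <= status <= 299:
        return ResponseKind.SUCCESS
    if 300 <= status <= 399:
        # Simplification: every 3xx is treated as a redirect here.
        return ResponseKind.REDIRECT
    if 400 <= status <= 599:
        return ResponseKind.HTTP_ERROR
    # 1xx informational codes and out-of-range values are not final
    # responses, so this sketch rejects them.
    raise ValueError(f"unexpected status code: {status}")
```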

There are two cases where a navigation can complete without resulting in a new document being rendered. The first is HTTP response codes 204 (No Content) and 205 (Reset Content), which tell the browser that the request succeeded but no content follows, so the current document must remain active. The other is when the server responds with a header indicating that the response must be treated as a download, such as “Content-Disposition: attachment”. The browser then saves the response data to the local filesystem according to its configuration.
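The two "no new document" cases can be checked from the status code and headers alone. This is a hypothetical sketch: `produces_new_document` is an illustrative name, and it only recognizes a download through the `Content-Disposition: attachment` header, ignoring any other reasons a browser might decide to download a response.

```python
from typing import Dict


def produces_new_document(status: int, headers: Dict[str, str]) -> bool:
    """Return False for the two cases where no new document is rendered."""
    # 204 No Content / 205 Reset Content: success, but no body follows,
    # so the current document stays active.
    if status in (204, 205):
        return False
    # HTTP header names are case-insensitive; normalize before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    disposition = normalized.get("content-disposition", "")
    # "attachment" asks the browser to save the body rather than render it.
    if disposition.strip().lower().startswith("attachment"):
        return False
    return True
```

For example, `produces_new_document(200, {"Content-Disposition": 'attachment; filename="report.pdf"'})` returns `False`, so the response would be saved rather than committed.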

The server can also send a redirect, in which case the browser makes another request based on the HTTP response code and the additional headers, such as Location, which carries the new URL. It continues following redirects until it receives a response that is not a redirect, either a success or an error.
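The redirect loop can be sketched against a fake server. In this hypothetical example, `fetch` stands in for the network request, and `MAX_REDIRECTS = 20` is an illustrative cap so that a redirect loop terminates; it is not presented as any particular browser's setting.

```python
from typing import Callable, Dict, List, Tuple
from urllib.parse import urljoin

# Hypothetical cap on the number of redirects, so a redirect loop ends.
MAX_REDIRECTS = 20

Response = Tuple[int, Dict[str, str]]  # (status code, headers)


def follow_redirects(
    url: str,
    fetch: Callable[[str], Response],
    max_redirects: int = MAX_REDIRECTS,
) -> Tuple[str, int, List[str]]:
    """Follow redirects until a response that is not a redirect arrives.

    fetch stands in for the network request; header names use canonical
    capitalization in this sketch. Returns the final URL, its status code,
    and every URL visited along the way.
    """
    chain = [url]
    for _ in range(max_redirects + 1):
        status, headers = fetch(url)
        location = headers.get("Location")
        if not (300 <= status <= 399 and location):
            # Success or error: stop and hand back the final response.
            return url, status, chain
        # Location may be relative, so resolve it against the current URL.
        url = urljoin(url, location)
        chain.append(url)
    raise RuntimeError(f"more than {max_redirects} redirects")


# A fake server: two redirects, then the real page.
SERVER: Dict[str, Response] = {
    "http://example.com/old": (301, {"Location": "/new"}),
    "http://example.com/new": (302, {"Location": "https://example.com/home"}),
    "https://example.com/home": (200, {"Content-Type": "text/html"}),
}
final_url, status, chain = follow_redirects("http://example.com/old", lambda u: SERVER[u])
print(final_url, status)  # https://example.com/home 200
```

Resolving `Location` with `urljoin` matters because servers often send relative paths such as `/new`.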

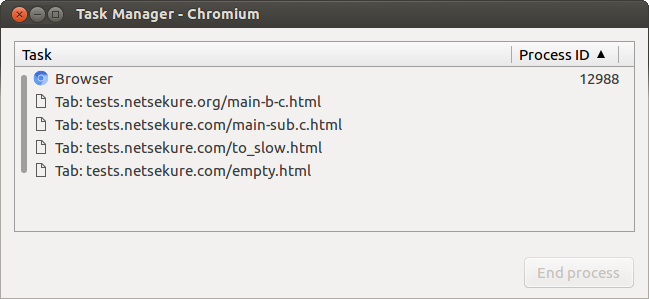

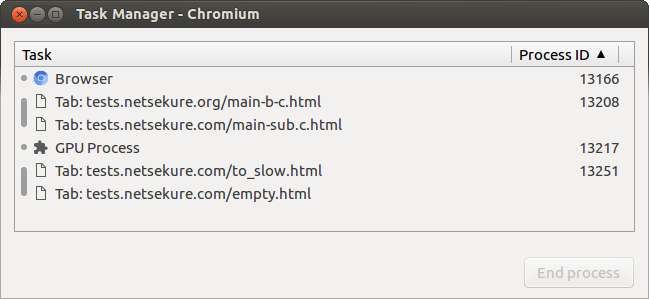

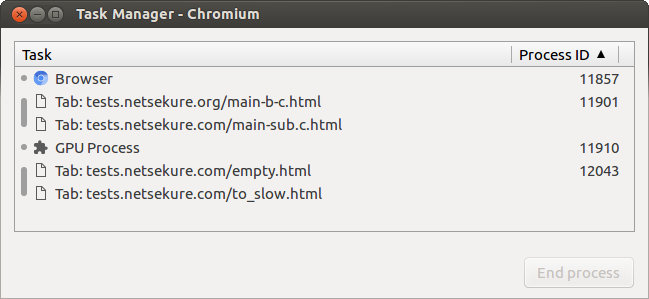

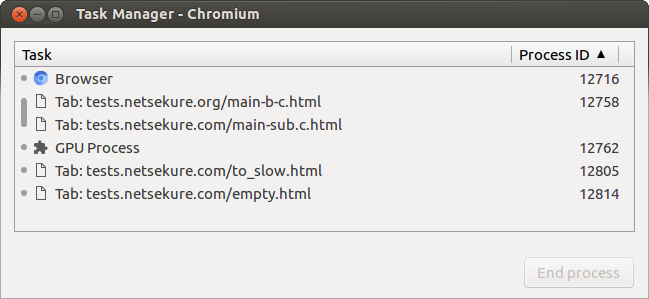

Once there are no more redirects, if the response is not a 204/205 or a download, the browser reads a small chunk of the actual response data that the server has sent. By default this chunk is used to perform MIME type sniffing, which determines what type of content the server has sent. This behavior can be suppressed by sending an “X-Content-Type-Options: nosniff” header as part of the response headers. At this point the browser is ready to switch to rendering the new document. In Chromium’s implementation, the term used for this point in time is “commit”: the browser has committed to rendering the new document and removing the old one.
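A heavily simplified sniffer shows how the first chunk of data and the nosniff header interact. This is a hypothetical sketch loosely inspired by the WHATWG MIME Sniffing Standard, not a faithful implementation of it or of Chromium: the signature list is a tiny subset, and both `UNKNOWN_TYPES` (which declared types sniffing may replace) and the fallback type are assumptions.

```python
from typing import Dict

# A tiny, illustrative subset of byte signatures. The WHATWG MIME Sniffing
# Standard defines far more patterns and rules than this.
SIGNATURES = [
    (b"\x89PNG\r\n\x1a\n", "image/png"),
    (b"GIF87a", "image/gif"),
    (b"GIF89a", "image/gif"),
    (b"%PDF-", "application/pdf"),
]

# Assumption: only a missing or "unknown" declared type is replaced by sniffing.
UNKNOWN_TYPES = {"", "unknown/unknown", "application/unknown", "*/*"}


def effective_mime_type(headers: Dict[str, str], first_chunk: bytes) -> str:
    """Pick the MIME type to use for a response, given its first bytes."""
    normalized = {name.lower(): value for name, value in headers.items()}
    declared = normalized.get("content-type", "").split(";")[0].strip().lower()
    nosniff = normalized.get("x-content-type-options", "").strip().lower() == "nosniff"

    if nosniff or declared not in UNKNOWN_TYPES:
        # Trust the declared type. With nosniff and no declared type, this
        # sketch falls back to a generic binary type (an assumption).
        return declared or "application/octet-stream"

    for signature, mime_type in SIGNATURES:
        if first_chunk.startswith(signature):
            return mime_type
    # HTML may be preceded by whitespace; compare case-insensitively.
    if first_chunk.lstrip().lower().startswith((b"<!doctype html", b"<html")):
        return "text/html"
    # This sketch does not try to tell plain text from binary data.
    return "application/octet-stream"
```

With a PNG body and no Content-Type header, the sketch returns `image/png`; adding `X-Content-Type-Options: nosniff` to the same response makes it ignore the bytes entirely.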

However, before the commit is performed, the old document needs to be notified that it is going away, so the browser executes the unload event handler of the old document, if one is registered. Once that is complete, the old document is no longer active, the new document is committed, and in strict terms, the navigation is complete.
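The ordering described above (unload the old document, then commit the new one) can be captured in a few lines. `Document`, `Frame` and `commit` are hypothetical names for this sketch; the unload handler is simplified to a plain callable.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Document:
    url: str
    unload_handler: Optional[Callable[[], None]] = None


@dataclass
class Frame:
    current: Optional[Document] = None
    log: List[str] = field(default_factory=list)

    def commit(self, new_document: Document) -> None:
        """Swap in new_document, notifying the old one first."""
        old = self.current
        if old is not None:
            # The old document is still active while its unload handler runs.
            if old.unload_handler is not None:
                old.unload_handler()
            self.log.append(f"unloaded {old.url}")
        # Only after unload completes does the new document become current.
        self.current = new_document
        self.log.append(f"committed {new_document.url}")


frame = Frame()
frame.current = Document(
    "https://a.example/",
    unload_handler=lambda: frame.log.append("unload handler ran"),
)
frame.commit(Document("https://b.example/"))
print(frame.log)
# ['unload handler ran', 'unloaded https://a.example/', 'committed https://b.example/']
```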

The astute reader will realize that even though I said the navigation is complete, the user doesn’t actually see anything at this point. Although most people use the word navigation to describe the act of moving from one page to another, I think of that process as consisting of two phases. So far I have described the navigation phase; once the navigation has committed, the browser moves into the loading phase. The loading phase consists of reading the remaining response data from the server, parsing it, rendering the document so it is visible to the user, executing any script accompanying it, and loading any subresources specified by the document. The main reason for splitting the process into these two phases is that errors are handled differently in each.

This brings us back to the case where the server responds with an error code. When this happens, the browser still commits a new document, but that document is an error page, which the browser either generates based on the HTTP response code or takes from the response data sent by the server. On the other hand, if a successful navigation has committed a real document from the server and moved to the loading phase, it is still possible to encounter an error; for example, the network connection can be terminated or time out. In that case the browser displays as much of the new document as it has parsed.
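The difference between the two phases shows up in what the user ends up seeing when something goes wrong. In this hypothetical sketch, `navigate` is an illustrative name, raising `ConnectionError` while iterating the body simulates a dropped connection, and the `"Error {status}"` text stands in for a browser-generated error page.

```python
from typing import Iterable, Iterator, Optional, Tuple


def navigate(
    status: int,
    body_chunks: Iterable[str],
    server_error_page: Optional[str] = None,
) -> Tuple[str, str]:
    """Return (outcome, content the user ends up seeing).

    body_chunks yields the response body piece by piece; raising
    ConnectionError mid-iteration simulates a dropped connection.
    """
    if 400 <= status <= 599:
        # Navigation phase: an error page is committed instead of a real
        # document. Use the server's own error body if it sent one.
        return "error page", server_error_page or f"Error {status}"

    # Success: the real document commits and the loading phase begins.
    parsed = []
    try:
        for chunk in body_chunks:
            parsed.append(chunk)
    except ConnectionError:
        # Loading phase: keep showing whatever was parsed before the failure.
        return "partial document", "".join(parsed)
    return "complete document", "".join(parsed)


def dropped_connection() -> Iterator[str]:
    yield "<h1>Title</h1>"
    raise ConnectionError("connection reset")


print(navigate(200, dropped_connection()))  # ('partial document', '<h1>Title</h1>')
print(navigate(404, []))                    # ('error page', 'Error 404')
```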

Chromium exposes the various stages of navigation and document loading through methods on the WebContentsObserver interface.

Navigation

- DidStartNavigation - invoked after the beforeunload event handler has run and before the initial network request is made.

- DidRedirectNavigation - invoked every time a server redirect is encountered.

- ReadyToCommitNavigation - invoked at the time the browser has determined that it will commit the navigation.

- DidFinishNavigation - invoked once the navigation has committed. The committed document is either an error page, if the server responded with an error code, or the new document from a successful response, in which case the browser has switched to the loading phase.
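The order in which these navigation callbacks fire can be illustrated with a recording observer. This is a hypothetical Python stand-in, not Chromium's API: the real WebContentsObserver is a C++ interface whose navigation methods receive a NavigationHandle, whereas here each method just records a URL. `RecordingObserver` and `simulate_navigation` are illustrative names; the method names keep Chromium's spelling so they are easy to match to the list above.

```python
from typing import List, Tuple


class RecordingObserver:
    """Hypothetical stand-in that records navigation callbacks in order."""

    def __init__(self) -> None:
        self.events: List[Tuple[str, str]] = []

    def DidStartNavigation(self, url: str) -> None:
        self.events.append(("DidStartNavigation", url))

    def DidRedirectNavigation(self, url: str) -> None:
        self.events.append(("DidRedirectNavigation", url))

    def ReadyToCommitNavigation(self, url: str) -> None:
        self.events.append(("ReadyToCommitNavigation", url))

    def DidFinishNavigation(self, url: str) -> None:
        self.events.append(("DidFinishNavigation", url))


def simulate_navigation(observer: RecordingObserver, url_chain: List[str]) -> None:
    """Fire the callbacks for a navigation that visits url_chain in order.

    url_chain[0] is the URL the user typed; each later entry is a redirect
    target, and the last entry is the URL that commits.
    """
    observer.DidStartNavigation(url_chain[0])  # after beforeunload, before the request
    for redirect_url in url_chain[1:]:
        observer.DidRedirectNavigation(redirect_url)  # once per server redirect
    observer.ReadyToCommitNavigation(url_chain[-1])
    observer.DidFinishNavigation(url_chain[-1])  # the navigation has committed


observer = RecordingObserver()
simulate_navigation(observer, ["http://example.com/", "https://example.com/"])
print([name for name, _ in observer.events])
# ['DidStartNavigation', 'DidRedirectNavigation', 'ReadyToCommitNavigation', 'DidFinishNavigation']
```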

Document loading

- DidStartLoading - invoked when a navigation is about to start, after executing the beforeunload handler.

- DocumentLoadedInFrame - invoked when the document itself has completed loading; this does not mean that all subresources have completed loading.

- DidFinishLoad - invoked when the document and all of its subresources have been loaded.

- DidStopLoading - invoked when the document, all of its subresources, all subframes and their subresources have completed loading.

- DidFailLoad - invoked when the document load failed, for example due to network connection termination before reading all of the response data.
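The loading callbacks can be sketched the same way. This hypothetical model (`FrameLoad` and `load_page` are illustrative names) makes two simplifying assumptions that real browsers do not follow exactly: frames load one after another rather than concurrently, and DidStopLoading is recorded once every frame has either finished or failed. The "loaded ..." entries mark subresources for readability and are not observer callbacks.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FrameLoad:
    """Hypothetical description of one frame's load (main frame or subframe)."""
    name: str
    subresources: List[str] = field(default_factory=list)
    fails: bool = False  # simulate the connection dropping mid-document


def load_page(frames: List[FrameLoad]) -> List[str]:
    """Return the loading callbacks, in order, for a page made of frames."""
    events = ["DidStartLoading"]
    for frame in frames:
        if frame.fails:
            # The document itself could not be fully read.
            events.append(f"DidFailLoad({frame.name})")
            continue
        # The document is loaded, but its subresources may still be pending.
        events.append(f"DocumentLoadedInFrame({frame.name})")
        for resource in frame.subresources:
            events.append(f"loaded {resource}")  # not an observer callback
        # The document and all of its subresources are done.
        events.append(f"DidFinishLoad({frame.name})")
    # All frames and their subresources are done (or failed).
    events.append("DidStopLoading")
    return events


page = [
    FrameLoad("main", subresources=["style.css", "app.js"]),
    FrameLoad("iframe", fails=True),
]
for event in load_page(page):
    print(event)
```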

Hopefully this post gives a good introduction to navigation in the browser and serves as a solid base for future posts.