I have seen many people versed in technology and security make incorrect statements about how Chromium’s multi-process architecture works. The most common misconception is that each tab gets a different process. The reality is more nuanced: Chromium supports several modes of operation, and how processes are allocated depends on which policy is in effect.

I decided to write up an explanation of the default process model and how it actually works. The goal is for it to be comprehensible to as many people as possible without requiring a degree in Computer Science, although basic familiarity with the web platform (HTML/JS) is expected. Getting there requires defining a few concepts first, so this is the first post in a series that will explain some of Chromium’s internals and demystify some parts of the HTML spec.

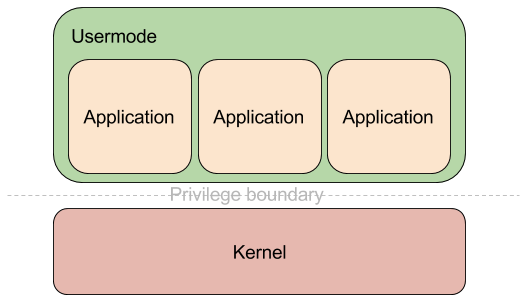

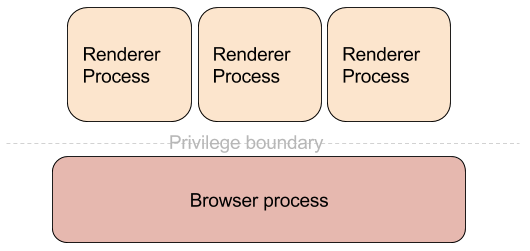

I have found the easiest mental model of the Chromium architecture to be that of an operating system: a kernel running at a high privilege level and a number of less privileged user-mode application processes. In addition, the user-mode processes are isolated from each other in terms of address space and execution context.

The equivalent of the kernel is the main process, which we call the “browser process”. It runs with the privileges of the underlying OS user account and handles all operations that require regular user permissions, such as communicating over the network, displaying the browser UI, processing user input, and writing files to disk. The equivalent of the user-mode processes are the various other types of processes that Chromium’s security model supports. The most common ones are:

- Renderer process - used for parsing and rendering web content using the Blink rendering engine

- GPU process - used for communicating with the GPU driver of the underlying operating system

- Utility process - used for performing untrusted operations, such as parsing untrusted data

- Plugin process - used for running plugins

They all run in a sandboxed environment and are as locked down as possible for the functionality they perform.

In modern operating system design, the principle of least privilege is key and separation between different user accounts is fundamental. The user account is the basic unit of separation, and from here on I will refer to this concept as the “security principal”. Each operating system represents security principals differently, for example UIDs in Unix and SIDs in Windows. On the web, the security principal is the origin: the combination of the scheme, host, and port of the URL the document was loaded from. Access control on the web is governed by the Same Origin Policy (SOP), which allows documents that belong to the same origin to communicate directly with each other and access each other synchronously. Two documents that do not belong to the same origin cannot access each other directly and can only communicate asynchronously, usually through the postMessage API. Overall, the same origin policy has worked very well for the web, but it also has some quirks which make origins unsuitable as the security principal for Chromium.
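To make the origin tuple concrete, here is a minimal Python sketch that extracts (scheme, host, port) from a URL and compares two URLs. It assumes only `http` and `https` URLs with their usual default ports (80 and 443) and ignores details such as opaque origins and internationalized domain names; `origin` and `same_origin` are hypothetical helper names, not browser APIs.

```python
from urllib.parse import urlsplit

# Assumption: only http and https are handled, with their standard default ports.
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> tuple:
    """Return the (scheme, host, port) tuple of a URL, filling in the default port."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = parts.hostname or ""  # urlsplit already lowercases the hostname
    port = parts.port if parts.port is not None else DEFAULT_PORTS[scheme]
    return (scheme, host, port)


def same_origin(a: str, b: str) -> bool:
    """Two URLs are same origin only if scheme, host, and port all match."""
    return origin(a) == origin(b)


print(same_origin("https://example.com/a", "https://example.com:443/b"))  # True
print(same_origin("https://example.com/", "http://example.com/"))         # False: scheme differs
print(same_origin("https://example.com/", "https://www.example.com/"))    # False: host differs
print(same_origin("https://example.com/", "https://example.com:8443/"))   # False: port differs
```

Changing any single component of the tuple is enough to make two URLs cross-origin, which is exactly the strictness the following paragraphs show being relaxed or ignored.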

The first reason comes from the HTML specification itself. It allows documents to “relax” their origin for the purpose of evaluating the same origin policy. Since the origin contains the full domain of the host serving the document, it can be a subdomain, for example “foo.bar.example.com”. In most cases, however, example.com has full control over all of its subdomains, yet documents on separate subdomains are not allowed to communicate directly because of the same origin policy. To make this scenario work, documents are allowed to change their domain for the purposes of evaluating SOP. In the case above, “foo.bar.example.com” can relax its domain up to “example.com”, which would allow a document on example.com itself to communicate with it, provided that document also sets its domain to “example.com”. This is achieved through the “domain” property of the document object. It does come with restrictions, though.
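The relaxation rule, and the fact that both documents have to opt in, can be sketched with a toy `Document` class. It loosely follows the HTML spec's "same origin-domain" comparison for tuple origins, but it is a simplified model: the class and method names are hypothetical, and the Public Suffix List restriction is deliberately left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Document:
    """Toy model of a document's origin plus its (optional) relaxed domain."""
    scheme: str
    host: str
    port: int
    domain: Optional[str] = None  # stays None until document.domain is assigned

    def set_domain(self, value: str) -> None:
        # Only the host itself or one of its parent domains is accepted.
        # The Public Suffix List check is intentionally omitted in this sketch.
        if value != self.host and not self.host.endswith("." + value):
            raise PermissionError(f"{value!r} is not {self.host!r} or a parent of it")
        self.domain = value


def same_origin_domain(a: Document, b: Document) -> bool:
    """Simplified same origin-domain check for tuple origins."""
    if a.domain is not None and b.domain is not None:
        # Both documents opted in: compare scheme and relaxed domain, ignore the port.
        return a.scheme == b.scheme and a.domain == b.domain
    if a.domain is None and b.domain is None:
        # Neither opted in: fall back to a plain same origin comparison.
        return (a.scheme, a.host, a.port) == (b.scheme, b.host, b.port)
    # Only one side opted in, so they are not treated as same origin-domain.
    return False


child = Document("https", "foo.bar.example.com", 443)
parent = Document("https", "example.com", 443)
child.set_domain("example.com")
print(same_origin_domain(child, parent))  # False: parent has not set its domain yet
parent.set_domain("example.com")
print(same_origin_domain(child, parent))  # True
```

The `endswith("." + value)` check is what restricts relaxation to walking up the DNS hierarchy: "foo.bar.example.com" may become "example.com", but never an unrelated domain or a partial label such as "ample.com".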

In order to understand the restrictions on what document.domain can be set to, one needs to know about the Public Suffix List (PSL) and how it fits into the security model of the web. Top-level domains like “com”, “net”, “uk”, etc., are treated specially, and no content can (or at least should) be hosted directly on them. Each subdomain of a top-level domain can be registered by a different entity and therefore must be treated as completely separate. There are also domains which are not top-level domains but still act as such. An example is “co.uk”, which serves as a parent domain under which commercial entities in the UK register their domains. Because such domains play the role of a top-level domain without being one, they cannot be recognized from the domain name alone, so the Public Suffix List exists as a comprehensive source for browsers and other software to use.
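A rough sketch of how software consults the list is to find the longest suffix of a host that appears in it. The four-entry `PUBLIC_SUFFIXES` set below is a made-up stand-in for the real list, which has thousands of entries plus wildcard and exception rules that this sketch does not handle. When nothing matches, it falls back to the last label, mirroring the PSL's default rule.

```python
# Hypothetical four-entry stand-in for the real Public Suffix List.
PUBLIC_SUFFIXES = {"com", "net", "uk", "co.uk"}


def public_suffix(host: str) -> str:
    """Return the longest suffix of `host` that is listed in PUBLIC_SUFFIXES."""
    labels = host.lower().split(".")
    # Try candidates from longest to shortest, so the first hit is the longest match.
    for i in range(len(labels)):
        candidate = ".".join(labels[i:])
        if candidate in PUBLIC_SUFFIXES:
            return candidate
    # No listed entry matched: fall back to the last label (the PSL's default rule).
    return labels[-1]


print(public_suffix("foo.bar.example.com"))  # com
print(public_suffix("shop.example.co.uk"))   # co.uk
print(public_suffix("example.org"))          # org (via the default rule)
```

Preferring the longest match is what makes "co.uk" win over "uk" for UK commercial domains.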

Now that we know about the PSL, let’s get back to document.domain. A document cannot change its domain to something completely generic or very encompassing, such as “.”. Browsers only allow documents to relax their domain up the DNS hierarchy. To use the example from above, “foo.bar.example.com” can set its domain to “bar.example.com” or “example.com”. However, since “com” is a top-level domain, allowing the document to set its domain to “com” would lead to security problems, as it would potentially let the document access documents from any other “.com” domain. Therefore browsers disallow setting the domain to any value in the Public Suffix List and require it to be a domain under one of the PSL entries. The shortest domain a document can relax to is therefore an effective top-level domain (an entry in the PSL) plus one more label beneath it, a concept often referred to as “eTLD+1”. I will use this name for brevity from here on.
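Combining the two rules (walk up the DNS hierarchy, but never reach a public suffix) gives the set of values a document could assign to document.domain. This Python sketch uses a made-up four-entry PSL subset as an assumption, and `etld_plus_one` and `allowed_document_domains` are hypothetical helpers; real browsers also reject values for IP-address hosts and apply further checks not modeled here.

```python
from typing import List, Optional

# Hypothetical four-entry stand-in for the real Public Suffix List.
PUBLIC_SUFFIXES = {"com", "net", "uk", "co.uk"}


def public_suffix(host: str) -> str:
    """Longest listed suffix of `host`, falling back to the last label."""
    labels = host.split(".")
    for i in range(len(labels)):
        candidate = ".".join(labels[i:])
        if candidate in PUBLIC_SUFFIXES:
            return candidate
    return labels[-1]


def etld_plus_one(host: str) -> Optional[str]:
    """The public suffix plus one more label, or None if `host` is itself a public suffix."""
    labels = host.split(".")
    suffix_len = public_suffix(host).count(".") + 1
    if len(labels) <= suffix_len:
        return None
    return ".".join(labels[-(suffix_len + 1):])


def allowed_document_domains(host: str) -> List[str]:
    """Values a document on `host` could assign to document.domain in this model."""
    labels = host.split(".")
    # The host itself and every parent domain, walking up the DNS hierarchy...
    candidates = [".".join(labels[i:]) for i in range(len(labels))]
    # ...minus anything that is itself a public suffix.
    return [c for c in candidates if public_suffix(c) != c]


print(allowed_document_domains("foo.bar.example.com"))
# ['foo.bar.example.com', 'bar.example.com', 'example.com']
print(etld_plus_one("foo.bar.example.com"))  # example.com
print(allowed_document_domains("shop.example.co.uk"))
# ['shop.example.co.uk', 'example.co.uk']
```

The last entry of each list is exactly the host's eTLD+1: relaxation can shorten the domain down to that point but never past it.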

This behavior, defined by the HTML spec, of allowing documents to change their origin is one of the reasons we cannot use the origin as the security principal in our model. The origin can change at runtime, so security decisions made at an earlier point in time might no longer be valid. The only part of the host that reliably stays the same is its eTLD+1.

The next oddity of the web is the concept of cookies. It is quite possibly the single most used feature of the web today, but it has its fair share of strange behaviors and brings numerous security problems with it. The problems stem from the fact that cookies don’t play very well with origins. Recall that an origin is the tuple (scheme, host, port). The cookie spec (RFC 6265), however, is pretty clear that “Cookies do not provide isolation by port”. And that isn’t all: the very next paragraph says “Cookies do not provide isolation by scheme”. This part has been partially patched up through the “Secure” attribute, which marks a cookie as available only over secure channels, in practice HTTPS. Since HTTP and HTTPS are by far the most common schemes on the web, this gives cookies some isolation by scheme, but port numbers are still completely ignored. As a result, it is impossible to use the origin as the security principal for performing access control on cookie storage.
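The mismatch can be illustrated with a deliberately simplified cookie-matching sketch. It assumes host-only cookies (no Domain or Path attributes), treats `https` as the only secure scheme, and uses hypothetical names (`Cookie`, `is_sent_to`); it is not how any browser structures its cookie store.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass
class Cookie:
    name: str
    value: str
    host: str             # simplification: a host-only cookie (no Domain attribute)
    secure: bool = False  # models the "Secure" attribute


def is_sent_to(cookie: Cookie, url: str) -> bool:
    """Decide whether `cookie` would be attached to a request for `url` in this model."""
    parts = urlsplit(url)
    if parts.hostname != cookie.host:
        return False
    if cookie.secure and parts.scheme != "https":
        return False  # Secure cookies are withheld from plain HTTP
    # parts.port is never consulted: cookies are not isolated by port.
    return True


session = Cookie("sid", "abc", "example.com")
token = Cookie("token", "xyz", "example.com", secure=True)
print(is_sent_to(session, "https://example.com/"))      # True
print(is_sent_to(session, "http://example.com:8080/"))  # True: other scheme and port
print(is_sent_to(token, "http://example.com/"))         # False: not HTTPS
print(is_sent_to(token, "https://example.com:8443/"))   # True: port still ignored
```

A cookie without `Secure` is shared by origins that differ in both scheme and port, and even a `Secure` cookie is shared across ports, so no single origin can be said to own it.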

Finally, there is enough background to understand the security principal used by Chromium: the site. It is defined as the combination of the scheme and the eTLD+1 part of the host. Subdomains and port numbers are ignored. For example, the site for https://foo.bar.example.com:2341 is https://example.com. This allows us to perform access control in a web-compatible way while still providing a granular level of isolation.
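Computing a site amounts to stripping a URL down to its scheme and eTLD+1. The Python sketch below is an illustration, not Chromium's implementation: it uses a made-up four-entry PSL subset in place of the real list, and `site_for_url` is a hypothetical helper.

```python
from urllib.parse import urlsplit

# Hypothetical four-entry stand-in for the real Public Suffix List.
PUBLIC_SUFFIXES = {"com", "net", "uk", "co.uk"}


def public_suffix(host: str) -> str:
    """Longest listed suffix of `host`, falling back to the last label."""
    labels = host.split(".")
    for i in range(len(labels)):
        candidate = ".".join(labels[i:])
        if candidate in PUBLIC_SUFFIXES:
            return candidate
    return labels[-1]


def site_for_url(url: str) -> str:
    """Reduce a URL to scheme://eTLD+1, dropping subdomains, port, path and query."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    labels = host.split(".")
    suffix_len = public_suffix(host).count(".") + 1
    # Keep the public suffix plus one label; a bare public suffix is kept as-is.
    site_host = ".".join(labels[-(suffix_len + 1):])
    return f"{parts.scheme}://{site_host}"


print(site_for_url("https://foo.bar.example.com:2341"))  # https://example.com
print(site_for_url("https://shop.example.co.uk/cart"))   # https://example.co.uk
print(site_for_url("http://example.com"))                # http://example.com
```

The last line shows why the scheme is part of the site: http://example.com and https://example.com end up as different sites even though they share the same eTLD+1, while every subdomain and port of https://example.com maps to the same site.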